Rate Limiting in APIs: Protecting Your Backend from Overload

APIs are the backbone of modern applications, powering everything from social media integrations to payment gateways. Left unprotected, however, they can be exploited or simply overwhelmed, leading to Denial of Service (DoS) attacks, server overload, and unexpected costs. One of the most effective techniques to defend against these issues is Rate Limiting.

What is Rate Limiting?

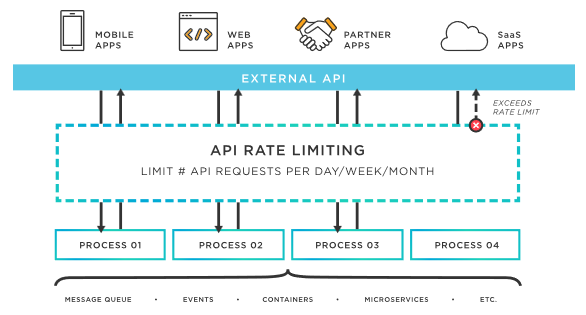

API rate limiting is the process of controlling how many requests a user or system can make to an API within a given timeframe. For example, an API might allow only 100 requests per minute per user. If a user exceeds this limit, the API will return an error (like 429 Too Many Requests) until the timeframe resets.

This mechanism helps prevent:

- Brute force attacks – e.g., credential stuffing by rapidly trying multiple username/password combinations.

- API abuse & scraping – stopping bots or scrapers from exhausting resources meant for real users.

- Server overload during traffic spikes – ensuring stability by throttling excessive requests during peak hours.

- Unexpected cloud costs – avoiding unnecessary expenses when services are billed per request.

Why Rate Limiting Matters in API Design

Many Web API design patterns and best practices have been proposed to improve performance and reliability. Among them, the Rate Limit pattern plays a crucial role in preventing excessive usage of resources by specific clients. By enforcing limits, API providers can:

- Avoid overwhelming backend systems.

- Maintain availability and performance.

- Enhance security-related properties like resilience against denial-of-service attacks.

However, rate limiting is not always standardized. Different providers adopt different strategies, configuration options, and even terminology. This inconsistency can:

- Make it hard for developers to understand API rate limit policies.

- Lead to accidental breaches of thresholds, causing client applications to fail.

- Complicate enforcement in cloud-based systems that don’t natively support rate limiting.

In such cases, API providers must rely on manual implementations or specialized tools to enforce limits and defend their systems against abusive behavior.

Common Rate Limiting Algorithms

Different algorithms are used to enforce rate limiting, each with trade-offs between accuracy, efficiency, and fairness.

Fixed Window Counter

- Requests are counted within fixed time intervals (e.g., 1 minute).

- Example: Allow 100 requests per minute.

- Pros: Simple, easy to implement.

- Cons: Allows bursts at window boundaries (e.g., 100 requests at 0:59 and another 100 at 1:01 all pass, so 200 requests arrive within about two seconds).
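The fixed window idea can be sketched in a few lines. This is a minimal, single-client illustration rather than a production implementation: the class name `FixedWindowCounter` and the `TryAcquire` method are hypothetical, it is not thread-safe, and the current time is passed in explicitly so the behavior is easy to reason about.

```csharp
using System;

// Minimal fixed window counter for one client (illustrative, not thread-safe).
public sealed class FixedWindowCounter
{
    private readonly int _limit;
    private readonly TimeSpan _window;
    private long _windowIndex = -1; // Identifies the window the counter belongs to
    private int _count;

    public FixedWindowCounter(int limit, TimeSpan window)
    {
        _limit = limit;
        _window = window;
    }

    // Returns true if a request arriving at 'now' is allowed.
    public bool TryAcquire(DateTimeOffset now)
    {
        // Windows are aligned to the clock: every instant maps to one window index.
        long index = now.UtcTicks / _window.Ticks;
        if (index != _windowIndex)
        {
            // A new window has started, so the counter starts over.
            _windowIndex = index;
            _count = 0;
        }

        if (_count >= _limit)
        {
            return false;
        }

        _count++;
        return true;
    }
}
```

With a limit of 2 per minute, two requests at 0:59 and two more at 1:01 are all accepted, because the counter resets at the 1:00 boundary. That is the boundary burst described in the cons above.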

Sliding Window Log

- Stores timestamps of each request in a log.

- Allows requests as long as they don’t exceed the limit in the last N seconds.

- Pros: More accurate, no burst problem at window boundaries.

- Cons: Requires storing many timestamps → higher memory usage.
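A sliding window log might look like the sketch below. The names `SlidingWindowLog` and `TryAcquire` are hypothetical, the class tracks a single client, and it is not thread-safe. Rejected requests are not logged here, which is one possible design choice among several.

```csharp
using System;
using System.Collections.Generic;

// Minimal sliding window log for one client (illustrative, not thread-safe).
public sealed class SlidingWindowLog
{
    private readonly int _limit;
    private readonly TimeSpan _window;
    private readonly Queue<DateTimeOffset> _timestamps = new Queue<DateTimeOffset>();

    public SlidingWindowLog(int limit, TimeSpan window)
    {
        _limit = limit;
        _window = window;
    }

    // Returns true if a request arriving at 'now' is allowed.
    public bool TryAcquire(DateTimeOffset now)
    {
        // Drop timestamps that have fallen out of the trailing window.
        while (_timestamps.Count > 0 && now - _timestamps.Peek() >= _window)
        {
            _timestamps.Dequeue();
        }

        if (_timestamps.Count >= _limit)
        {
            return false;
        }

        // Only accepted requests are recorded in the log.
        _timestamps.Enqueue(now);
        return true;
    }
}
```

The memory cost is visible in the queue: it holds one timestamp per accepted request, so a limit of 100 per minute means up to 100 stored timestamps per client.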

Sliding Window Counter

- Uses smaller sub-windows to approximate limits.

- Example: 100 requests per minute → track requests in 6 sub-windows of 10 seconds each.

- Pros: Balances accuracy and memory efficiency.

- Cons: Slight approximation, but good enough for most APIs.
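The sub-window approach above can be sketched with a small ring of counters. The class name `SlidingWindowCounter` and its members are hypothetical, it handles a single client, and it is not thread-safe. It assumes the window length divides evenly into the chosen number of sub-windows.

```csharp
using System;

// Minimal sliding window counter built from sub-window buckets
// (illustrative, not thread-safe).
public sealed class SlidingWindowCounter
{
    private readonly int _limit;
    private readonly long _bucketTicks;
    private readonly int[] _counts;     // Requests counted per slot
    private readonly long[] _bucketIds; // Which sub-window each slot currently holds

    public SlidingWindowCounter(int limit, TimeSpan window, int buckets)
    {
        _limit = limit;
        _bucketTicks = window.Ticks / buckets;
        _counts = new int[buckets];
        _bucketIds = new long[buckets];
        Array.Fill(_bucketIds, -1L); // -1 marks a slot that has never been used
    }

    // Returns true if a request arriving at 'now' is allowed.
    public bool TryAcquire(DateTimeOffset now)
    {
        long current = now.UtcTicks / _bucketTicks;
        int slot = (int)(current % _counts.Length);

        // Reuse the slot if it still holds an older sub-window.
        if (_bucketIds[slot] != current)
        {
            _bucketIds[slot] = current;
            _counts[slot] = 0;
        }

        // Sum only the sub-windows that belong to the trailing window.
        int total = 0;
        for (int i = 0; i < _counts.Length; i++)
        {
            if (current - _bucketIds[i] < _counts.Length)
            {
                total += _counts[i];
            }
        }

        if (total >= _limit)
        {
            return false;
        }

        _counts[slot]++;
        return true;
    }
}
```

Memory use is fixed at one counter per sub-window regardless of traffic. The approximation comes from treating each sub-window as a unit: a request is forgotten when its whole sub-window leaves the trailing window, not exactly one window length after it arrived.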

Token Bucket

- Clients earn tokens at a fixed rate (e.g., 1 token per second), up to a maximum bucket capacity.

- Each request consumes a token.

- If no tokens remain → request is denied.

- Pros: Supports bursts while maintaining average rate.

- Cons: More complex to implement.
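As a rough sketch, a token bucket can be implemented by refilling lazily on each request instead of running a timer. The names `TokenBucket` and `TryAcquire` are hypothetical, the bucket starts full (an assumption, not something the algorithm requires), and the class is not thread-safe.

```csharp
using System;

// Minimal token bucket for one client (illustrative, not thread-safe).
public sealed class TokenBucket
{
    private readonly double _capacity;        // Maximum burst size
    private readonly double _refillPerSecond; // Sustained average rate
    private double _tokens;
    private DateTimeOffset _lastRefill;

    public TokenBucket(double capacity, double refillPerSecond, DateTimeOffset start)
    {
        _capacity = capacity;
        _refillPerSecond = refillPerSecond;
        _tokens = capacity; // Assumption: the bucket starts full
        _lastRefill = start;
    }

    // Returns true if a request arriving at 'now' is allowed.
    public bool TryAcquire(DateTimeOffset now)
    {
        // Add the tokens earned since the last call, capped at capacity.
        double elapsedSeconds = (now - _lastRefill).TotalSeconds;
        if (elapsedSeconds > 0)
        {
            _tokens = Math.Min(_capacity, _tokens + elapsedSeconds * _refillPerSecond);
            _lastRefill = now;
        }

        if (_tokens < 1)
        {
            return false;
        }

        _tokens -= 1; // Each accepted request consumes one token
        return true;
    }
}
```

The capacity controls how large a burst can be, while the refill rate controls the long-term average. A bucket with capacity 3 and a refill of 1 token per second accepts three requests at once, then one per second after that.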

Best Practices for Rate Limiting APIs

- Set Smart Limits

- Differentiate between endpoints — for example,

/loginshould have stricter limits than/documentation. - Vary limits by user type (SLA) — e.g., free vs. paid tiers

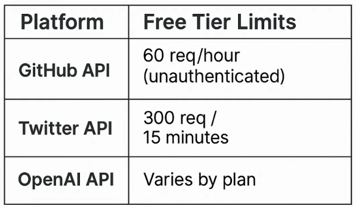

- Real world API rate limits

- Differentiate between endpoints — for example,

- Use Multiple Rate Limiting Layers

- Global rate limits — enforce limits across your website/backend per IP, visitor, or API key.

- Endpoint-specific limits — apply stricter throttling for sensitive endpoints like

/checkoutand/login.

- Return Clear Rate Limit Headers , Include headers such as

X-RateLimit-Limit: 100

X-RateLimit-Remaining: 42

X-RateLimit-Reset: 60 - Handle Rate Limit Exceeded Gracefully

- Return HTTP 429 (Too Many Requests) with a

Retry-Afterheader. - Log and monitor excessive requests for potential abuse or security analysis.

- Return HTTP 429 (Too Many Requests) with a

- Distribute Rate Limiting in Microservices

- Use Redis or distributed caching for consistency across servers.

- Implement tools like Kong, NGINX, or Envoy to enforce global limits.

- Monitor & Adjust Limits

- Track API usage with tools like Prometheus, Grafana, or CloudWatch.

- Continuously adjust limits based on real traffic patterns.
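The header recommendations in the list above could be captured in a small helper like the one below. This is only a sketch: `RateLimitHeaders.Build` and its parameters are hypothetical names, and it assumes X-RateLimit-Reset carries the number of seconds until the window resets, as in the example values above. Some APIs send a Unix timestamp instead, so check the convention you want to follow.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Builds the response headers for a rate-limited endpoint (illustrative helper).
public static class RateLimitHeaders
{
    public static IDictionary<string, string> Build(
        int limit, int used, TimeSpan untilReset, bool rejected)
    {
        int remaining = Math.Max(0, limit - used);
        int resetSeconds = (int)Math.Ceiling(untilReset.TotalSeconds);

        var headers = new Dictionary<string, string>
        {
            ["X-RateLimit-Limit"] = limit.ToString(CultureInfo.InvariantCulture),
            ["X-RateLimit-Remaining"] = remaining.ToString(CultureInfo.InvariantCulture),
            // Assumption: seconds until reset, not an absolute timestamp.
            ["X-RateLimit-Reset"] = resetSeconds.ToString(CultureInfo.InvariantCulture),
        };

        // Retry-After only accompanies a 429 response.
        if (rejected)
        {
            headers["Retry-After"] = resetSeconds.ToString(CultureInfo.InvariantCulture);
        }

        return headers;
    }
}
```

In ASP.NET Core middleware, each pair returned here could be copied onto `context.Response.Headers` before the response body is written.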

Rate Limiting in .NET Core Using Custom Middleware

CREATE THE MIDDLEWARE

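    using System;
    using System.Collections.Concurrent;
    using System.Threading.Tasks;
    using Microsoft.AspNetCore.Http;

    public class RateLimitingMiddleware
    {
        private readonly RequestDelegate _next;

        // Request count and window start time per client IP.
        // Note: entries are never evicted, so this grows with the number of distinct IPs.
        private static readonly ConcurrentDictionary<string, (int Count, DateTime Timestamp)> _requests
            = new ConcurrentDictionary<string, (int Count, DateTime Timestamp)>();

        private readonly int _limit = 5;          // Max requests per window
        private readonly int _windowSeconds = 10; // Time window (seconds)

        public RateLimitingMiddleware(RequestDelegate next)
        {
            _next = next;
        }

        public async Task InvokeAsync(HttpContext context)
        {
            // Clients without a resolvable address share the "unknown" bucket.
            var ip = context.Connection.RemoteIpAddress?.ToString() ?? "unknown";
            var now = DateTime.UtcNow;

            // Update the counter atomically so concurrent requests from the
            // same IP cannot overwrite each other's increments.
            var entry = _requests.AddOrUpdate(
                ip,
                _ => (1, now),
                (_, current) => (now - current.Timestamp).TotalSeconds > _windowSeconds
                    ? (1, now)                                  // Window expired: start a new one
                    : (current.Count + 1, current.Timestamp));  // Same window: count this request

            if (entry.Count > _limit)
            {
                // Tell the client how long to wait until the current window ends.
                var elapsed = (now - entry.Timestamp).TotalSeconds;
                var retryAfter = Math.Max(1, (int)Math.Ceiling(_windowSeconds - elapsed));

                context.Response.StatusCode = StatusCodes.Status429TooManyRequests;
                context.Response.Headers["Retry-After"] = retryAfter.ToString();
                await context.Response.WriteAsync("Too many requests. Try again later.");
                return;
            }

            await _next(context);
        }
    }

REGISTER MIDDLEWARE IN Program.cs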

Open Program.cs and add the middleware before the call to app.MapControllers():

    app.UseMiddleware<RateLimitingMiddleware>();

Conclusion

Implementing rate limiting in your APIs is a crucial step to protect your backend from abuse, prevent server overload, and ensure fair usage among clients. With .NET Core and .NET 8, creating a custom middleware gives developers full control over how limits are enforced — whether per IP, API key, or endpoint.

In this blog, we walked through:

- Why rate limiting matters for API security and performance

- A practical middleware example to enforce request limits

- How to return 429 Too Many Requests responses when a client exceeds its limit

While this approach works well for single-server deployments, for production-ready applications, consider using distributed caches like Redis or the built-in .NET 8 Rate Limiting Middleware to handle multiple instances and more advanced policies.

By following these steps, you can make your APIs more secure, resilient, and predictable, providing a better experience for both your users and your infrastructure.