Mastering Docker: A Deep Dive into Containers, Images, and the Docker Daemon

Docker has transformed the way we build, deploy, and manage applications, enabling developers to create lightweight, portable environments that work seamlessly across different systems. Whether you’re dealing with microservices, testing environments, or production deployments, Docker can simplify workflows, reduce resource usage, and make your applications more robust. This post delves into Docker’s core concepts, key benefits, essential commands, and advanced use cases to help you make the most of containerization.

What Is Docker and Why Is It Essential?

Docker is an open-source platform designed to automate the deployment of applications inside isolated containers. These containers include everything an application needs to run (code, runtime, libraries, and configuration), packaged into a single unit that’s easy to deploy and behaves consistently across environments. Containers are typically much lighter than virtual machines: instead of booting a full guest operating system, they share the host OS kernel, which reduces memory, disk, and startup overhead.

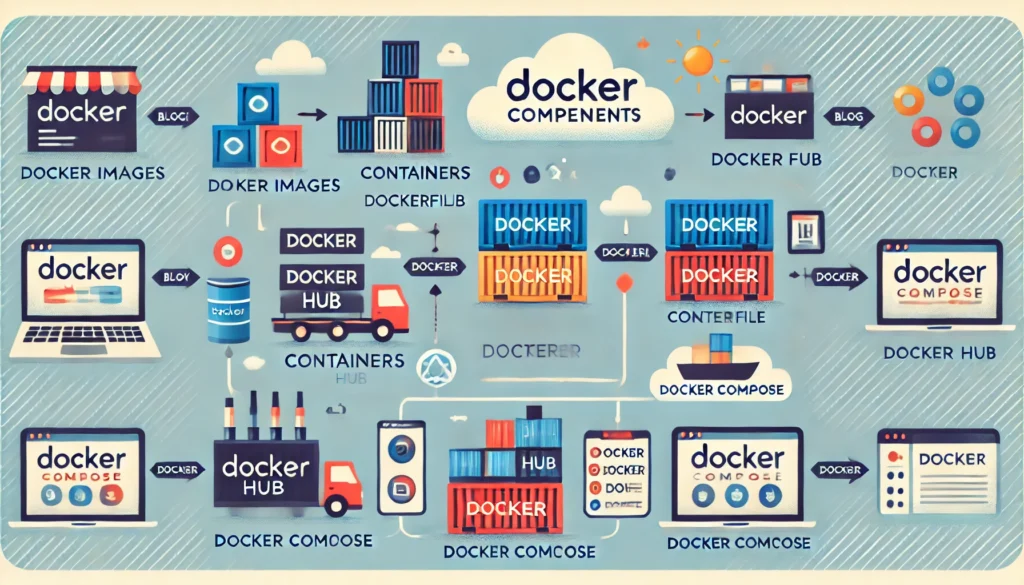

Key Components of Docker

Understanding Docker requires a look at its foundational components:

- Docker Images: Images are like blueprints for Docker containers. They define what the container will include and how it will behave. Images are immutable and built from a stack of read-only layers, which lets Docker share common layers between images and reuse them for every container started from the same image.

- Docker Containers: Containers are instances of images. They are isolated, lightweight environments that run the specified application and its dependencies without interfering with other containers or the host OS.

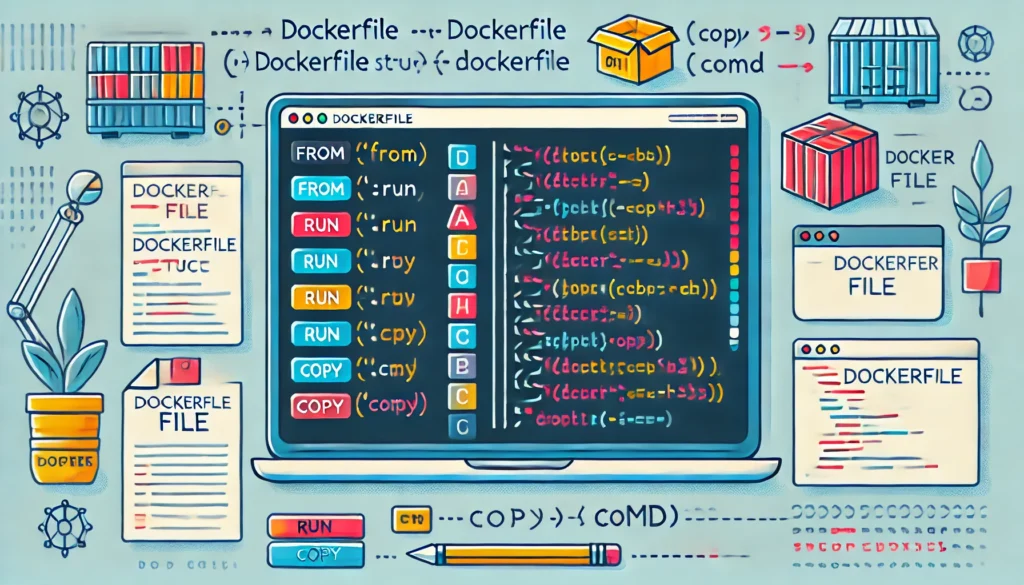

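- Dockerfile: A Dockerfile is a text file containing a series of instructions for building a Docker image. It lets you choose a base image (for example, an OS or language runtime image), install dependencies, copy files, set environment variables, and more. Each instruction that changes the filesystem, such as RUN, COPY, or ADD, adds a new layer to the image.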

- Docker Hub: Docker Hub is a cloud-based registry where developers can share and distribute Docker images. You can find thousands of images on Docker Hub, including official images for popular technologies like Node.js, PostgreSQL, and Nginx.

- Docker Compose: Compose is a tool for defining and running multi-container Docker applications. With Compose, you can manage complex applications by defining each service (e.g., database, backend, frontend) in a single YAML file.
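The layer model also explains why the order of Dockerfile instructions matters for build speed. The Python sketch below is a toy model of the build cache, not Docker’s actual implementation: it assumes each layer’s cache key is a SHA-256 hash of the previous layer’s key, the instruction text, and, for copy steps, a hash of the copied content (the `Step` format and the function names are hypothetical). It reproduces the behavior real builds show: when one instruction or its inputs change, that layer and every layer after it are rebuilt, while the layers before it come from the cache.

```python
import hashlib
from typing import Dict, List, Optional, Tuple

# Hypothetical build step: (instruction text, bytes of the files it copies, or None).
Step = Tuple[str, Optional[bytes]]


def layer_keys(steps: List[Step]) -> List[str]:
    """Compute a cache key per step; each key chains in the key of the step before it."""
    keys: List[str] = []
    parent = ""
    for instruction, content in steps:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(instruction.encode())
        if content is not None:
            # Copied files contribute by content, so editing them changes the key.
            h.update(hashlib.sha256(content).digest())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def build(steps: List[Step], cache: Dict[str, int]) -> List[bool]:
    """Simulate a build; return True for each step whose layer came from the cache."""
    hits: List[bool] = []
    for index, key in enumerate(layer_keys(steps)):
        hits.append(key in cache)
        cache[key] = index  # record the (pretend) layer for future builds
    return hits


# Commonly recommended ordering: install dependencies before copying the source.
v1 = [
    ("FROM python:3.12-slim", None),
    ("COPY requirements.txt .", b"flask\n"),
    ("RUN pip install -r requirements.txt", None),
    ("COPY . .", b"print('v1')\n"),
]
cache: Dict[str, int] = {}
print(build(v1, cache))  # → [False, False, False, False]
v2 = v1[:3] + [("COPY . .", b"print('v2')\n")]
print(build(v2, cache))  # → [True, True, True, False]
```

Because only the final `COPY . .` step changed, the dependency install is reused. Had the source been copied before `pip install`, every code edit would invalidate the install layer as well.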

Key Benefits of Docker

Here’s why Docker has become a go-to solution for developers and DevOps engineers alike:

- Consistent Environments: Because an image bundles the application together with its dependencies, the same image runs the same way from development to production, minimizing issues caused by differences in dependencies or configurations across platforms.

- Enhanced Portability: Since Docker containers package everything an application needs to run, they can be deployed on any system with Docker installed, provided the image was built for that system’s CPU architecture (for example, amd64 or arm64). This portability is invaluable for cloud deployments, where the same image can run on AWS, Google Cloud, Azure, or any other cloud service.

- Resource Efficiency: Unlike virtual machines, which need a separate OS instance, Docker containers share the host OS kernel, making them lightweight and allowing for higher density on the same hardware.

- Rapid Deployment and Scaling: Docker containers are designed to start quickly, making them ideal for scaling applications horizontally. Orchestrators like Kubernetes can automatically scale Docker containers based on demand, making it easy to adapt to workload changes.

- Isolation and Security: Each Docker container runs in an isolated environment, which reduces the risk of conflicts between applications and adds a layer of security. Docker also supports creating secure networks, managing secrets, and setting up resource limits for containers.
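Resource limits and hardening options are set per container with `docker run` flags. As a sketch of how a script might apply them consistently, the hypothetical Python helper below assembles a `docker run` argument list for `subprocess`. The flags `--memory`, `--cpus`, `--pids-limit`, `--cap-drop`, and `--read-only` are real `docker run` options, but the helper’s name and default values are illustrative assumptions. A read-only root filesystem or dropping all capabilities can break images that write to their filesystem or need specific privileges, so tune these per image (for example with `--cap-add` or a writable `--tmpfs` mount).

```python
import shlex
from typing import List, Optional


def hardened_run_args(image: str, name: Optional[str] = None,
                      memory: str = "512m", cpus: float = 1.0,
                      pids_limit: int = 100, read_only: bool = True) -> List[str]:
    """Build a `docker run` argument list with resource limits (hypothetical helper)."""
    args = [
        "docker", "run", "-d",
        "--memory", memory,               # hard memory cap, e.g. "512m"
        "--cpus", str(cpus),              # CPU quota, e.g. 1.5 CPUs
        "--pids-limit", str(pids_limit),  # maximum number of processes
        "--cap-drop", "ALL",              # drop all Linux capabilities
    ]
    if read_only:
        args.append("--read-only")        # mount the root filesystem read-only
    if name:
        args += ["--name", name]
    args.append(image)                    # the image name always comes last
    return args


if __name__ == "__main__":
    # Printing only; pass the list to subprocess.run(cmd, check=True) to start it.
    cmd = hardened_run_args("my-app", name="api")
    print(shlex.join(cmd))
    # → docker run -d --memory 512m --cpus 1.0 --pids-limit 100 --cap-drop ALL --read-only --name api my-app
```

Passing an argument list to `subprocess.run` instead of a single shell string avoids shell-quoting problems.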

Real-World Applications of Docker

Docker’s flexibility makes it a powerful tool for a variety of use cases. Here are some practical ways it’s commonly used in the industry:

1. Microservices Architecture

Microservices architecture breaks applications into independent, modular services that can be developed, deployed, and scaled separately. Docker containers are ideal for microservices because each service can be packaged with its own dependencies and runtime, avoiding conflicts with other services. Docker Compose is useful for managing these multi-container environments, while Kubernetes is often used to orchestrate and scale microservices in production.

2. Continuous Integration and Continuous Deployment (CI/CD)

Docker can streamline CI/CD pipelines by providing a consistent testing environment. Developers can create containers that replicate production environments, so code changes are tested under conditions that closely match the live system. Many CI/CD tools, like Jenkins, GitLab CI/CD, and CircleCI, support Docker integration, allowing you to automate the testing and deployment of Dockerized applications.

3. Development and Testing Environments

Docker makes it easy to set up isolated development environments that mirror production. Instead of installing dependencies locally, developers can run containers that include all the necessary software. This helps prevent the “works on my machine” problem by ensuring every team member works in an identical environment. Using Docker Compose, developers can also manage complex environments that require multiple services like databases, cache servers, and messaging queues.

4. Legacy Application Modernization

Many organizations use Docker to modernize legacy applications, allowing them to run on modern infrastructure without significant code changes. By containerizing these applications, companies can achieve better resource utilization, facilitate cloud migration, and ensure consistency across environments.

5. Batch Processing and Data Processing

For data-intensive tasks, such as batch processing, data analytics, and machine learning, Docker containers offer an isolated environment to run resource-hungry tasks on powerful cloud instances. Docker containers ensure all dependencies and configurations are consistent, which is critical in data processing pipelines that involve multiple stages and tools.

Advanced Docker Concepts and Tools

Once you’re comfortable with Docker basics, there are a few advanced tools and techniques that can take your Docker experience to the next level:

1. Docker Compose for Multi-Container Applications

Docker Compose simplifies multi-container applications by allowing you to define services, networks, and volumes in a single docker-compose.yml file. You can use Compose to manage complex applications that require multiple services to work together (e.g., a web server, database, and cache). Here’s a sample docker-compose.yml file for a web app with a database:

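```yaml
# The top-level "version" key is only used by the legacy docker-compose v1 tool;
# Compose v2 ignores it (recent releases warn that it is obsolete).
version: '3'

services:
  web:
    image: nginx
    ports:
      - "80:80"        # host port 80 -> container port 80
    depends_on:
      - db             # start db before web
  db:
    image: postgres
    environment:
      POSTGRES_USER: user
      POSTGRES_PASSWORD: password   # placeholder; use secrets in real deployments
```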
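Compose treats `depends_on` as a dependency graph and starts each service only after the services it depends on. The Python sketch below reproduces that start order with a topological sort from the standard library’s `graphlib` module (Python 3.9+). The function name is hypothetical, and it models ordering only, not whether a dependency is ready to accept connections; for readiness, the Compose specification offers a long-form `depends_on` with `condition: service_healthy` combined with a healthcheck.

```python
from graphlib import TopologicalSorter
from typing import Dict, List


def start_order(depends_on: Dict[str, List[str]]) -> List[str]:
    """Return a start order in which every service follows its dependencies."""
    sorter = TopologicalSorter(depends_on)  # maps each service to what it depends on
    return list(sorter.static_order())      # raises graphlib.CycleError on cycles


# Mirrors the docker-compose.yml example: web depends on db.
services = {"web": ["db"], "db": []}
print(start_order(services))  # → ['db', 'web']
```

A circular dependency has no valid start order, which is why the sort raises an error instead of guessing.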

2. Docker Swarm for Container Orchestration

Docker Swarm is Docker’s native clustering and orchestration tool. It allows you to manage a cluster of Docker nodes and deploy services across multiple hosts. Swarm is useful for production deployments where you need high availability and automatic load balancing for containerized applications.

3. Networking and Security

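Docker offers several networking drivers. Bridge networks connect containers on a single host, host networking lets a container use the host’s network stack directly without network isolation, and overlay networks span multiple Docker hosts (as in Swarm). Containers attached to the same user-defined network can reach each other by name through Docker’s built-in DNS, while containers on different networks are isolated from each other by default. Docker also manages the host firewall rules for published ports, supports secrets management, and lets you limit the memory, CPU, and processes a container can use.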
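Name-based discovery is what lets any service in the Compose example reach the database at host `db`, port 5432 (the container port, not a published host port). Because `depends_on` by default only orders container startup and does not wait for Postgres to accept connections, applications often retry until the dependency is reachable. The Python sketch below is one hypothetical way to do that with only the standard library; the function name, timeout, and polling interval are assumptions.

```python
import socket
import time


def wait_for_service(host: str, port: int, timeout: float = 30.0,
                     interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds; return False on timeout.

    Inside a container, `host` can be a service or container name such as "db",
    which Docker's embedded DNS resolves on user-defined networks.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # DNS failures (socket.gaierror) and refused connections are both
            # OSError subclasses, so both are treated as "not ready yet".
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

In an application container, a startup script might call `wait_for_service("db", 5432)` before opening its database connection pool.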

4. Using Docker with Kubernetes

Kubernetes is an industry-standard tool for orchestrating containers, including images built with Docker, at scale. Since version 1.24 it no longer uses Docker Engine as its container runtime, but it runs Docker-built images through runtimes such as containerd. It provides advanced features like automated scaling, rolling updates, self-healing, and resource management, making it ideal for managing complex, production-grade applications. If you’re running applications in a distributed environment, Kubernetes offers more features than Docker Swarm and is widely supported by cloud providers.

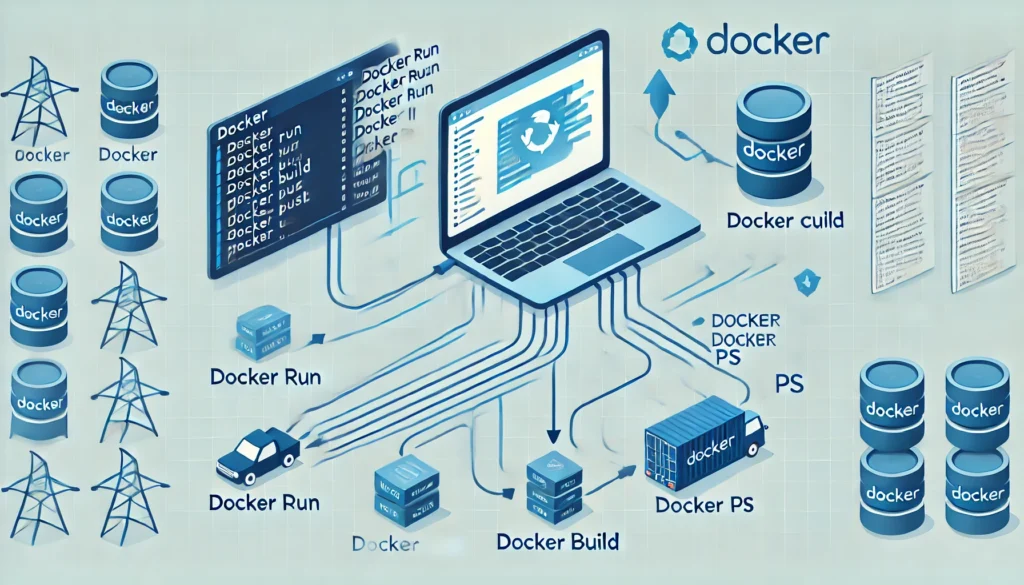

Essential Docker Commands for Day-to-Day Usage

Here’s a quick reference for essential Docker commands to help you get started and manage containers efficiently:

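- Run a Container:

```shell
docker run -d -p 8080:80 nginx
```

Runs the Nginx container in detached mode (`-d`) and maps port 8080 on the host to port 80 in the container.

- List Containers:

```shell
docker ps -a
```

Shows all containers, running and stopped (omit `-a` to list only running ones).

- Stop a Container:

```shell
docker stop <container_id>
```

- Remove a Container:

```shell
docker rm <container_id>
```

- Build an Image from a Dockerfile:

```shell
docker build -t my-app .
```

Builds an image from the Dockerfile in the current directory and tags it `my-app`.

- Push an Image to Docker Hub:

```shell
docker push myusername/my-app
```

Docker Hub images must carry your account namespace, so log in with `docker login` and tag the locally built image first with `docker tag my-app myusername/my-app` (or build it directly with `-t myusername/my-app`).

- Using Docker Compose:

```shell
docker-compose up -d
```

Starts all services defined in docker-compose.yml in detached mode. With Compose v2, which ships as a Docker CLI plugin, the equivalent command is `docker compose up -d`.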

Understanding the Docker Client

The Docker Client is a crucial part of Docker’s architecture, acting as the primary way for users to interact with Docker. It serves as a command-line tool (docker) that communicates with the Docker daemon, making it possible to manage Docker objects like images, containers, networks, and volumes. Below is an in-depth look at what the Docker Client does, how it works, and why it’s essential.

What is the Docker Client?

The Docker Client is a command-line interface (CLI) that enables you to issue commands to the Docker daemon. Every command you type in the CLI, such as docker run, docker pull, or docker build, is processed by the Docker Client and sent to the Docker daemon, which carries out the instructions.

Key Features of the Docker Client

- Simple Command Syntax: Docker Client commands follow a simple syntax, typically starting with `docker` followed by an action (`run`, `build`, `pull`, etc.). This makes Docker accessible, even to beginners, and allows for fast and efficient management of Docker resources.

- Communication with Docker Daemon: The Docker Client uses a REST API to communicate with the Docker daemon, which is responsible for the actual creation, management, and removal of containers and images. This separation between the Client and daemon keeps the design modular and lets a client on one machine control a daemon running on another.

- Scripting and Automation: Because the Docker Client is a CLI, it integrates well with scripts and automation tools. This allows users to automate repetitive tasks, like building or deploying containers, making Docker ideal for CI/CD pipelines.
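As an example of that kind of automation, the Python sketch below shells out to the Docker CLI and asks `docker ps` for machine-readable output with `--format '{{json .}}'`, which prints one JSON object per container. The function names are hypothetical, and the field names used here (`ID`, `Names`, `Status`) reflect current Docker output but may vary across versions.

```python
import json
import subprocess
from typing import Dict, List


def list_containers(all_containers: bool = True) -> List[Dict[str, str]]:
    """Run `docker ps` and return one dict per container (needs the Docker CLI)."""
    cmd = ["docker", "ps", "--format", "{{json .}}"]
    if all_containers:
        cmd.insert(2, "-a")  # include stopped containers, like `docker ps -a`
    out = subprocess.run(cmd, check=True, capture_output=True, text=True).stdout
    return parse_ps_output(out)


def parse_ps_output(text: str) -> List[Dict[str, str]]:
    """Parse `docker ps --format '{{json .}}'` output: one JSON object per line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def stopped(containers: List[Dict[str, str]]) -> List[str]:
    """Names of containers whose status does not start with "Up"."""
    return [c["Names"] for c in containers
            if not c.get("Status", "").startswith("Up")]
```

For example, `print(stopped(list_containers()))` lists stopped containers you might then clean up with `docker rm`. Parsing is kept separate from running the command so it can be tested without a Docker daemon.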

Why the Docker Client is Essential

The Docker Client is essential because it acts as the main entry point for users to interact with Docker. It provides a straightforward way to issue commands and get instant feedback. The Client simplifies complex tasks like container management and orchestration, making Docker more approachable for developers and system administrators alike.

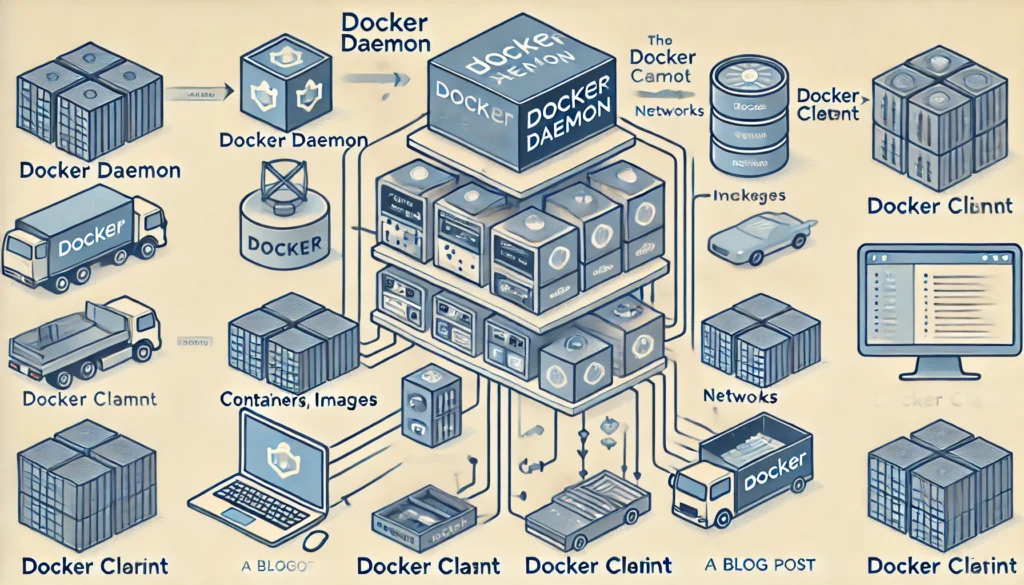

Understanding the Docker Daemon Architecture

The Docker Daemon is a critical component of Docker’s architecture. It runs in the background on the host machine and is responsible for managing Docker resources, including containers, images, volumes, and networks. The Docker Daemon works alongside the Docker Client, processing commands received from the Client to handle all Docker-related tasks.

Key Functions of the Docker Daemon

- Managing Containers: The Docker Daemon is responsible for creating, starting, stopping, and deleting containers from Docker images. When you run a command like `docker run`, the Daemon receives the request from the Docker Client and prepares the container’s configuration (filesystem layers, networking, resource limits); in current Docker releases it hands the low-level process execution to containerd and runc.

- Handling Docker Images: The Daemon also manages images, allowing you to pull, build, and store Docker images. When you build a Docker image with `docker build`, it’s the Daemon (by default through its built-in BuildKit builder) that actually builds the image based on the instructions in your Dockerfile.

- Networking: The Docker Daemon manages networks, allowing containers to communicate with each other and with external networks. It creates network bridges and assigns IP addresses to containers.

- Volume Management: The Daemon creates and manages volumes, which store data outside a container’s writable layer so it persists after the container is removed and can be shared between containers.

How the Docker Daemon and Docker Client Interact

The Docker Client and Docker Daemon communicate through the Docker Engine API, a REST API served over a Unix socket (by default /var/run/docker.sock on Linux) or over a TCP network interface, which should be protected with TLS. The Docker Client translates commands like `docker pull` and `docker run` into API requests, and the Daemon executes them. This client-server model allows the Client and Daemon to reside on different machines; the `DOCKER_HOST` environment variable or the `docker context` command selects which daemon the Client talks to.
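To see the client-server split directly, you can bypass the CLI and call the Engine API yourself. The Python sketch below uses only the standard library to send an HTTP GET over the daemon’s Unix socket. `/var/run/docker.sock` is the default Linux path, and `GET /version` and `GET /containers/json` are documented Engine API endpoints; the class and function names are hypothetical. Accessing the socket usually requires root or membership in the `docker` group.

```python
import http.client
import json
import socket
from typing import Any

DEFAULT_SOCKET = "/var/run/docker.sock"  # default daemon socket on Linux


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 10.0) -> None:
        # "localhost" only fills the Host header; the socket path picks the daemon.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_api_get(path: str, socket_path: str = DEFAULT_SOCKET) -> Any:
    """Send GET <path> to the Docker Engine API and return the decoded JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status >= 400:
            raise RuntimeError(f"Docker API returned {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On a machine with Docker running, `docker_api_get("/version")["Version"]` returns the daemon’s version, and `docker_api_get("/containers/json")` returns the running-container list that `docker ps` displays. The equivalent request with curl is `curl --unix-socket /var/run/docker.sock http://localhost/version`.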

Why the Docker Daemon is Essential

The Docker Daemon is the backbone of Docker, doing the heavy lifting behind container lifecycle management, image builds, resource management, and networking. Without the Daemon, Docker couldn’t create or manage containers, build images, or set up networks. It ensures that Docker operations run smoothly and efficiently on the host machine, making Docker a robust and scalable platform for containerized applications.

Conclusion

Docker has reshaped the landscape of software development, providing a versatile, efficient, and consistent way to deploy applications. From microservices and CI/CD pipelines to data processing and legacy modernization, Docker enables developers and operations teams to achieve faster deployment, greater scalability, and higher resource efficiency. By mastering Docker, you can simplify your development workflow, reduce infrastructure costs, and make your applications more portable and resilient.

Ready to dive deeper? Explore Docker’s official documentation, or try using Docker Compose and Kubernetes to manage multi-container applications. With Docker, the potential is limitless.