How Caching Works: Memory, Disk, and Distributed Caches Explained

In today’s digital world, speed and efficiency are everything. Whether you’re browsing a website, streaming a video, or using an app, the expectation is instant performance. Behind the scenes, one of the key technologies that make this possible is caching.

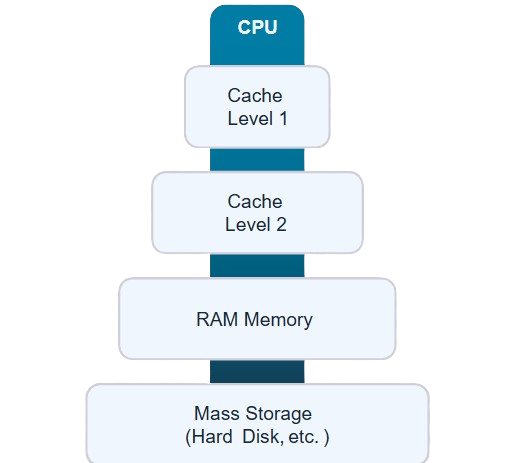

Caching is a technique that lets applications temporarily store data in a faster storage layer, most often a computer’s memory (RAM), for quick retrieval. The temporary storage area for this data is known as the cache. Caching frequently accessed data makes applications more efficient, since reading from memory is much faster than reading from solid-state drives (SSDs), hard disk drives (HDDs), or network resources.

Caching is especially efficient when the application exhibits a common pattern of repeatedly accessing data that was previously accessed. It’s also useful for storing time-consuming calculations. By saving the result in a cache, the system avoids recalculating the same thing over and over again, dramatically improving performance.
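As a minimal sketch of saving a calculation’s result, the snippet below uses `functools.lru_cache` from Python’s standard library. The function `slow_square` and the counter `call_count` are hypothetical stand-ins for an expensive computation.

```python
from functools import lru_cache

call_count = 0  # counts how many times the function body actually runs


@lru_cache(maxsize=128)
def slow_square(n: int) -> int:
    """Hypothetical stand-in for a time-consuming calculation."""
    global call_count
    call_count += 1
    return n * n


first = slow_square(12)   # computed, then stored in the cache
second = slow_square(12)  # served from the cache; the body is skipped
info = slow_square.cache_info()  # reports hits, misses, and current size
```

After both calls, `call_count` is 1 and `info` reports one hit and one miss: the second call never reached the function body.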

Let’s break down how caching works and the different types of caches (memory, disk, and distributed) that power modern systems.

WHAT IS CACHING?

Memory caching sets aside a small portion of main memory (RAM) as a temporary storage area for frequently accessed data. It improves application performance by reducing the time it takes to fetch data from slower sources such as hard disk drives or the network.

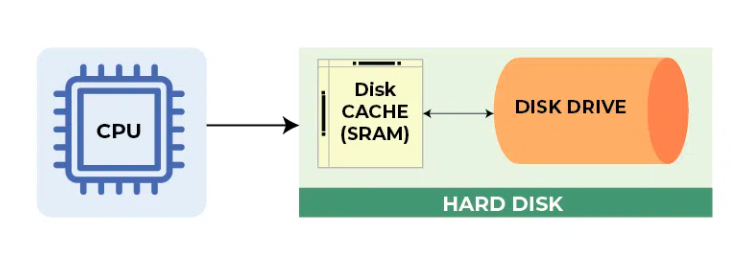

DISK CACHE

A disk cache is a portion of RAM used to store data that has recently been read from or written to a disk, such as an HDD or SSD. Disk caching reduces the number of physical read/write operations, thereby improving overall system performance.

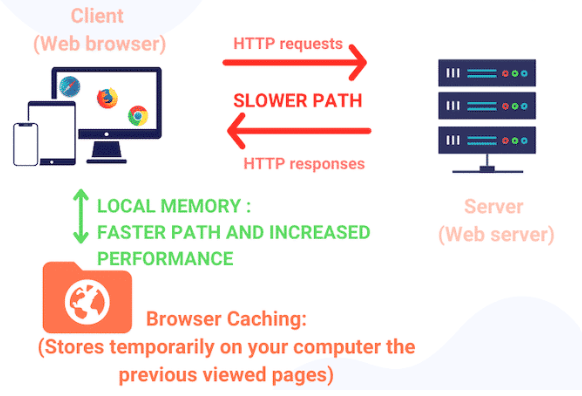

BROWSER CACHE

A web browser’s cache is a temporary storage area for web content—such as HTML pages, images, and other media. When a user visits a webpage, their browser stores a copy of the content locally. On subsequent visits, the browser loads the cached version instead of re-downloading it, significantly speeding up page load times.

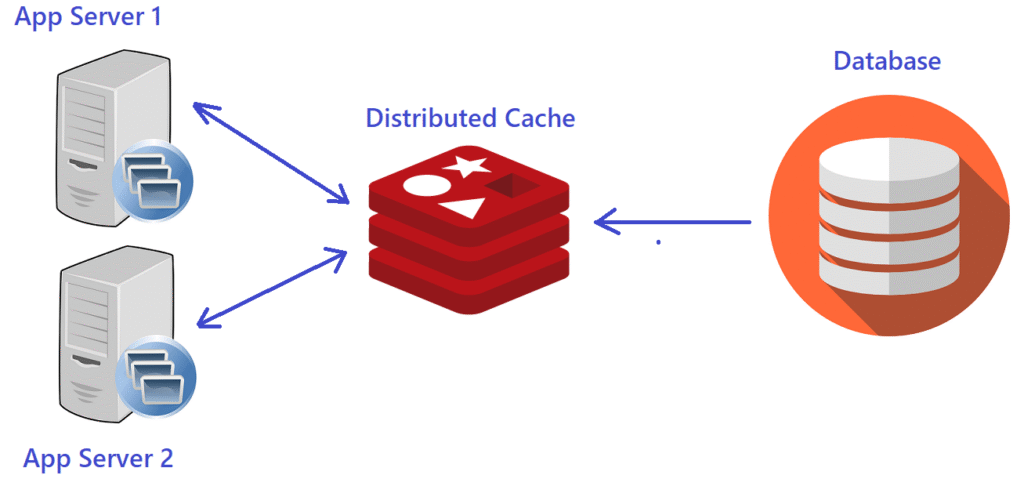

DISTRIBUTED CACHE

A distributed cache is a shared cache used by multiple servers within a network. It stores frequently accessed data across various nodes, reducing the need to repeatedly retrieve it from databases or slower storage. Distributed caching enhances performance, scalability, and reliability in large-scale applications—especially in cloud and microservices environments.

DEEP DIVE: MEMORY, DISK, AND DISTRIBUTED CACHES

1. Memory Caches (In-Memory Caching)

What it is: Stores data directly in RAM (Random Access Memory).

Why use it: RAM access times are measured in nanoseconds, making memory caching the fastest option for data retrieval.

Examples:

- Local memory: A web application server storing session data in its own memory.

- In-memory cache systems: Tools like Redis and Memcached.

PROS

- Ultra-fast reads/writes.

- Reduces repeated computation or database queries.

- Great for data that changes frequently but doesn’t need permanent storage.

CONS

- Limited by the machine’s RAM capacity.

- Data is volatile—lost if the system crashes or restarts (unless persistence is configured).

Best use cases: User sessions, authentication tokens, real-time leaderboards, frequently accessed small datasets.
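To make the idea of short-lived in-memory data concrete, here is a small sketch of a local cache whose entries expire after a fixed time, as might suit sessions or tokens. The class name `TTLCache`, the key format, and the injectable `clock` parameter are assumptions made for illustration; tools like Redis offer key expiry as a built-in feature.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """In-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, Any]] = {}

    def set(self, key: str, value: Any) -> None:
        # Store the value together with the time at which it expires.
        self._store[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None  # never cached
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._store[key]  # expired: drop it and report a miss
            return None
        return value


sessions = TTLCache(ttl_seconds=1800)
sessions.set("session:abc", {"user_id": 7})
current = sessions.get("session:abc")
```

Because the data lives in a plain dictionary inside the process, it disappears on restart, which is the volatility trade-off listed above.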

2. Disk Caches

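What it is: Stores cache data on physical storage like HDDs or SSDs instead of volatile RAM. (This is a different sense from the RAM-backed disk cache described earlier, which speeds up access to the disk itself.)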

Why use it: Slower than memory, but allows much larger datasets to be cached cost-effectively.

Examples:

- Web browsers storing images, scripts, and pages locally for faster page loads.

- Operating systems caching file system metadata on disk.

PROS

- Persistent: Data remains available even after a system restart.

- Larger storage capacity than RAM.

- Can handle bulkier datasets (e.g., video caching, large query results).

CONS

- Slower access times compared to RAM.

- Frequent writes can shorten drive lifespan, particularly on SSDs, whose flash cells tolerate a limited number of write cycles.

Best use cases: Content delivery (videos, images), offline availability of files, OS-level file caching, application boot optimization.
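The persistence property can be illustrated with a very small file-based cache. This is a sketch under simple assumptions: values are JSON-serializable, one file is written per key, and the class name `DiskCache` and the key `report:2024` are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Any, Optional


class DiskCache:
    """Stores each cached value as a JSON file in a directory."""

    def __init__(self, directory: Path) -> None:
        self._dir = Path(directory)
        self._dir.mkdir(parents=True, exist_ok=True)

    def _path(self, key: str) -> Path:
        # Hash the key so any string maps to a safe file name.
        digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
        return self._dir / f"{digest}.json"

    def set(self, key: str, value: Any) -> None:
        self._path(key).write_text(json.dumps(value), encoding="utf-8")

    def get(self, key: str) -> Optional[Any]:
        path = self._path(key)
        if not path.exists():
            return None  # cache miss
        return json.loads(path.read_text(encoding="utf-8"))


cache_dir = Path(tempfile.mkdtemp(prefix="disk-cache-demo-"))
DiskCache(cache_dir).set("report:2024", {"rows": 1200})

# A fresh object pointing at the same directory simulates a restart:
# the in-memory object is gone, but the cached file is still there.
restored = DiskCache(cache_dir).get("report:2024")
```

Every read and write here goes through the file system, which is why this kind of cache is slower than RAM but survives restarts.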

3. Distributed Caches

What it is: Cache data is stored across multiple servers or nodes in a cluster.

Why use it: Addresses the scalability limits of local caches and gives every server a shared view of cached data, instead of each instance holding its own, possibly divergent, copy.

Examples:

- Redis Cluster, Hazelcast, Apache Ignite, Amazon ElastiCache.

- Used heavily in cloud applications and microservices architectures.

PROS

- Scales horizontally by adding more nodes.

- High availability and fault tolerance (replication & sharding).

- Shared cache across multiple applications or instances.

CONS

- Higher latency than a local in-memory cache, since each lookup involves a network round trip.

- Requires cluster management and monitoring.

- More complex to configure than local caching.

Best use cases:

- Large-scale e-commerce platforms (storing product catalog data).

- Microservices needing shared state (session storage, API rate limiting).

- High-traffic websites with millions of concurrent users.
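The sharding idea, where each key lives on one node of the cluster, can be sketched in a single process. In the snippet below, plain dictionaries stand in for remote cache servers, and the class name `ShardedCache` and the node names are hypothetical. It uses simple hash-modulo placement for brevity; production systems typically use consistent hashing or fixed hash slots so that adding a node moves fewer keys.

```python
import hashlib
from typing import Any, Dict, List, Optional


class ShardedCache:
    """Spreads keys across several nodes (simulated here by dicts)."""

    def __init__(self, node_names: List[str]) -> None:
        if not node_names:
            raise ValueError("at least one node is required")
        self._names = list(node_names)
        self._nodes: Dict[str, Dict[str, Any]] = {n: {} for n in self._names}

    def node_for(self, key: str) -> str:
        # Use a stable hash: Python's built-in hash() of strings varies
        # between processes, so servers would disagree on placement.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._names)
        return self._names[index]

    def set(self, key: str, value: Any) -> None:
        self._nodes[self.node_for(key)][key] = value

    def get(self, key: str) -> Optional[Any]:
        return self._nodes[self.node_for(key)].get(key)

    def sizes(self) -> Dict[str, int]:
        # Number of entries held by each node.
        return {name: len(data) for name, data in self._nodes.items()}


catalog = ShardedCache(["node-a", "node-b", "node-c"])
for product_id in range(30):
    catalog.set(f"product:{product_id}", {"id": product_id})
distribution = catalog.sizes()
```

Every application instance that uses the same node list and hash function will look for a given key on the same node, which is what lets many servers share one cache.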

CACHING AND SYSTEM PERFORMANCE

Caching contributes significantly to a computer system’s overall performance by reducing the time it takes to access data and instructions. When a computer program needs to retrieve data, it first checks the cache to see if the information is already stored there. If the data is present (a cache hit), the program can access it much faster than fetching it from slower storage media such as hard drives, SSDs, or networked storage. If it is not (a cache miss), the program fetches the data from the slower source and typically stores a copy in the cache for next time. This reduces latency and accelerates program execution, leading to smoother user experiences.
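The check-the-cache-first flow can be sketched as follows. The class `LookupCache` and the loader `load_from_slow_storage` are hypothetical names; the loader stands in for a disk read, database query, or network call.

```python
from typing import Any, Callable, Dict


class LookupCache:
    """Checks the cache first and falls back to a slower loader on a miss."""

    def __init__(self, loader: Callable[[str], Any]) -> None:
        self._loader = loader
        self._data: Dict[str, Any] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Any:
        if key in self._data:
            self.hits += 1  # cache hit: no trip to slow storage
            return self._data[key]
        self.misses += 1  # cache miss: load, then remember the result
        value = self._loader(key)
        self._data[key] = value
        return value

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def load_from_slow_storage(key: str) -> str:
    """Hypothetical stand-in for a slow read."""
    return f"value-for-{key}"


cache = LookupCache(load_from_slow_storage)
cache.get("user:1")  # miss: goes to slow storage
cache.get("user:1")  # hit: served from memory
ratio = cache.hit_ratio()
```

The hit ratio is a common way to judge whether a cache is paying off: the closer it is to 1.0, the fewer requests reach the slow source.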

By serving frequently accessed data directly from the cache, the workload on slower storage devices and networks is reduced. This not only improves the performance of individual applications but also enhances the overall efficiency of the system. Devices and network resources are freed up to handle other operations, which leads to better responsiveness and stability across the system.

For example:

- A web browser cache allows previously visited pages, images, and scripts to load quickly without repeatedly downloading them.

- A CPU cache ensures instructions and data needed for computation are readily available, reducing the number of expensive memory fetches.

- Database caching reduces the number of repetitive queries sent to a database, significantly improving application speed and reducing server strain.

In short, caching improves system performance by minimizing repeated read/write operations, optimizing hardware utilization, and enabling programs to run more efficiently. Whether in personal devices or large-scale distributed systems, caches act as a critical performance booster.

EXAMPLES OF CACHING / USE CASES

Caching can be leveraged in a variety of specific use cases such as:

- Database acceleration – Speeds up access to frequently queried data.

- Query acceleration – Improves the response time of repeated or complex queries.

- Web/mobile application acceleration – Enhances user experience by reducing load times.

- Cache-as-a-service – Provides caching solutions as a managed service for scalability and efficiency.

- Web caching – Stores frequently accessed web pages to improve response times and reduce server load.

- Content delivery network (CDN) caching – Delivers content faster to users by storing cached data closer to their geographical location.

- Session management – Maintains user session data efficiently, improving application performance.

- Microservices caching – Reduces latency and overhead in distributed microservices-based architectures.

THE CHALLENGE OF DISTRIBUTED DATA SYSTEMS

While distributed caching is powerful, it comes with several challenges. One of the biggest is that your data is often scattered across multiple backend systems. Each system may store different types of information, making it difficult to maintain a single, coherent view of the data.

You need a caching solution that can provide context for these different data types, ensuring that the application can understand and correctly interpret the data. This is especially important when you require more information than what is already stored in the cache. Without proper context and synchronization, cached data can become inconsistent or stale, which may lead to incorrect results or unexpected behavior.

Other challenges in distributed caching include:

- Cache consistency – Ensuring all nodes reflect the latest data changes.

- Replication and synchronization – Keeping cached data up-to-date across multiple servers.

- Network overhead – Retrieving data from multiple nodes can introduce latency.

- Fault tolerance – Handling node failures without losing cached data.

Distributed caching systems, therefore, require careful design and monitoring to maintain reliability and performance while serving large-scale, multi-server applications.
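One common way to limit stale data is to invalidate a cache entry whenever the underlying record is written. The sketch below shows the idea in a single process; the class `CachedStore` and its two dictionaries are hypothetical stand-ins for a database and a cache. In a real distributed setup, the invalidation would also have to reach every node holding a copy, which is where much of the difficulty lies.

```python
from typing import Any, Dict, Optional


class CachedStore:
    """A backend with a cache in front that is invalidated on writes."""

    def __init__(self) -> None:
        self.database: Dict[str, Any] = {}  # stand-in for the source of truth
        self.cache: Dict[str, Any] = {}     # stand-in for the cache

    def read(self, key: str) -> Optional[Any]:
        if key in self.cache:
            return self.cache[key]
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value  # populate the cache on a miss
        return value

    def write(self, key: str, value: Any) -> None:
        self.database[key] = value
        # Remove the cached copy so the next read sees the new value.
        self.cache.pop(key, None)


store = CachedStore()
store.write("price:42", 10)
before = store.read("price:42")  # cached as 10
store.write("price:42", 12)      # invalidates the cached 10
after = store.read("price:42")   # reloaded from the database
```

Without the `pop` in `write`, the second read would keep returning the old price until the entry was evicted or expired.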

LIMITATIONS IN DATA PROCESSING AND ANALYSIS WITH CACHES

A plain cache only stores and returns data; it does not manipulate, organize, calculate, or analyze it. Any processing still has to happen in the application or in backend systems after the data is retrieved. For your company, the extra time spent retrieving data from the cache and then processing it through multiple backend systems can delay actionable information long enough that revenue opportunities are missed.

BENEFITS OF CACHING

- Speed – Rapid access to frequently used data

- Cost Efficiency – Reduces load on databases and APIs

- Scalability – Enables applications to handle more users

- Reduced Latency – Better user experience

- Resilience – Provides fallback in case of temporary backend failures
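The resilience benefit can be sketched as serving the last known good value when the backend is temporarily unreachable. The names `FlakyBackend` and `FallbackCache`, and the choice of `ConnectionError` as the failure signal, are assumptions made for this illustration.

```python
from typing import Any, Callable, Dict


class FlakyBackend:
    """Hypothetical backend that can be switched off to simulate an outage."""

    def __init__(self) -> None:
        self.up = True

    def fetch(self, key: str) -> str:
        if not self.up:
            raise ConnectionError("backend unavailable")
        return f"fresh-{key}"


class FallbackCache:
    """Returns the last good value if the backend call fails."""

    def __init__(self, fetch: Callable[[str], Any]) -> None:
        self._fetch = fetch
        self._last_good: Dict[str, Any] = {}

    def get(self, key: str) -> Any:
        try:
            value = self._fetch(key)
        except ConnectionError:
            if key in self._last_good:
                return self._last_good[key]  # possibly stale, but available
            raise  # nothing cached, so the failure is passed on
        self._last_good[key] = value
        return value


backend = FlakyBackend()
cache = FallbackCache(backend.fetch)
normal = cache.get("homepage")         # backend is up: fresh value is cached
backend.up = False
during_outage = cache.get("homepage")  # backend is down: cached value is served
```

The trade-off is that users may see slightly outdated data during an outage, which is often preferable to an error page.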

CONCLUSION

Caching is one of the most powerful techniques for improving the performance, scalability, and responsiveness of modern applications. By temporarily storing frequently accessed data, caching reduces latency, minimizes repeated computations, and lowers the workload on slower storage systems.

We explored the different types of caches (memory, disk, CPU, browser, and distributed) and how each suits specific use cases. Memory caches provide lightning-fast access for hot data, disk caches allow persistence for larger datasets, and distributed caches enable scalable solutions for multi-server and cloud-based applications.

While caching brings tremendous benefits, it also introduces challenges such as stale data, cache invalidation, and distributed consistency issues. A well-designed caching strategy, combined with careful monitoring, can help applications maximize speed and efficiency while mitigating these risks.

In practice, combining memory, disk, and distributed caching layers often provides the best balance between performance, scalability, and reliability. Whether you are building a simple web application or a large-scale microservices architecture, understanding and leveraging caching is essential for delivering fast, reliable, and efficient software.
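A layered lookup can be sketched as a list of tiers ordered from fastest to slowest. In the snippet below, plain dictionaries stand in for the memory and disk layers, and `TieredCache` and `load_from_origin` are hypothetical names. A value found in a slower tier is copied into the faster tiers so the next lookup is cheaper.

```python
from typing import Any, Callable, Dict, List


class TieredCache:
    """Looks up keys in tiers ordered from fastest to slowest."""

    def __init__(self, tiers: List[Dict[str, Any]],
                 loader: Callable[[str], Any]) -> None:
        self._tiers = tiers    # e.g. [memory, disk], fastest first
        self._loader = loader  # source of truth, used when every tier misses

    def get(self, key: str) -> Any:
        for index, tier in enumerate(self._tiers):
            if key in tier:
                value = tier[key]
                # Promote the value into every faster tier.
                for faster in self._tiers[:index]:
                    faster[key] = value
                return value
        value = self._loader(key)
        for tier in self._tiers:
            tier[key] = value  # fill all tiers after a full miss
        return value


def load_from_origin(key: str) -> str:
    """Hypothetical stand-in for the slowest source, such as a database."""
    return f"origin-{key}"


memory: Dict[str, Any] = {}
disk: Dict[str, Any] = {"logo.png": "cached-bytes"}
layers = TieredCache([memory, disk], load_from_origin)
from_disk = layers.get("logo.png")    # found in the disk tier, promoted to memory
from_origin = layers.get("page.html")  # missed everywhere, loaded and stored
```

After these two calls both keys are present in `memory`, so repeat lookups are answered by the fastest tier.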

- Caching speeds up data access and improves user experience.

- Choose the right type of cache based on data size, persistence, and access patterns.

- Monitor cache performance and implement proper eviction and invalidation policies.

- Layering caches (memory → disk → distributed) can deliver optimal results.
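As one example of an eviction policy, here is a small least-recently-used (LRU) cache built on `collections.OrderedDict` from Python’s standard library. The class name `LRUCache` and the capacity of 2 are chosen for illustration only; LRU is one policy among several.

```python
from collections import OrderedDict
from typing import Any, Optional


class LRUCache:
    """Evicts the least recently used entry once capacity is exceeded."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: "OrderedDict[str, Any]" = OrderedDict()

    def get(self, key: str) -> Optional[Any]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def set(self, key: str, value: Any) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # drop the least recently used

    def keys(self) -> list:
        # Keys ordered from least to most recently used.
        return list(self._items)


cache = LRUCache(capacity=2)
cache.set("a", 1)
cache.set("b", 2)
cache.get("a")     # "a" is now the most recently used entry
cache.set("c", 3)  # capacity exceeded: "b" is evicted
remaining = cache.keys()
```

Here `remaining` is `["a", "c"]`: reading `"a"` protected it, so `"b"` was the entry dropped when `"c"` arrived.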

Ultimately, caching is more than a performance optimization—it’s a fundamental tool that can transform how applications handle data, enabling faster responses, reduced costs, and better scalability.